Data Science, Machine Learning, Natural Language Processing, Text Analysis, Recommendation Engine, R, Python

Saturday, 30 June 2018

Geek Trivia: The Bird Of Prey With The Largest Natural Geographic Range Is The?

7 Awesome Ultralight Blankets For Air Travel, Road Trips, And More

Whether you’re traveling a short distance or on a long haul trip, you want to be comfortable, right?

How to Get Started with a Standing Desk

Bitcoin bloodbath nears dot-com levels as many tokens go to zero

Play Robot Odyssey, Which Inspired a Generation of Programmers, Right Now in Your Browser

Install Light Switch Guards to Keep People from Turning Off Your Smart Bulbs

Friday, 29 June 2018

Geek Trivia: The Real World Company Located In Terminator 2’s Cyberdyne Systems Building Specializes In?

You Can Now Add Music to Your Instagram Stories

Watch World Cup Scores from the Terminal

Can You Use FaceTime on Android?

DataWorks Summit Launches Demopalooza with a Bang

This blog post was originally published on the DataWorks Summit Blog. One of the most exciting additions to DataWorks Summit was a new event called Demopalooza. Now, I might be slightly biased as one of the hosts, but it sure seemed like attendees really enjoyed themselves. There could be a few reasons for this, not least of which […]

The post DataWorks Summit Launches Demopalooza with a Bang appeared first on Hortonworks.

What is “dasd” and Why is it Running on my Mac?

The Best USB Microphones

A good USB microphone is a fantastic way to record studio-quality sound on your computer without investing in a full studio setup.

How to Return Audible Audiobooks

What is Cryptojacking, and How Can You Protect Yourself?

Thursday, 28 June 2018

Geek Trivia: The United States Postal Service Printed Billions Of Stamps With The Wrong Image Of?

BenQ ScreenBar Review: The Perfect Computer Desk Lamp

Claiming something is the perfect computer desk lamp is a rather, well, bold claim.

Apple’s Terrible Keyboards and Why Repairability Matters

Use Auto-Replace to Sound More Assertive

How To Recover Your Forgotten Instagram Password

AT&T Nearly Triples a Fee On Your Bill to Extract Hundreds of Millions From Customers

You probably don’t notice the small “Administrative fee” on your cell phone bill. AT&T is banking on that. Literally.

What is “Microsoft Network Realtime Inspection Service” (NisSrv.exe) and Why Is It Running On My PC?

Increasing Hadoop Storage Scale by 4x!

This is the 8th post in the Hadoop blog series (part 1, part 2, part 3, part 4, part 5, part 6, part 7). In this post, we will discuss how NameNode Federation provides scalability and performance benefits to the cluster. The Apache Hadoop Distributed File System (HDFS) is highly scalable and can support petabyte-sizes […]

The post Increasing Hadoop Storage Scale by 4x! appeared first on Hortonworks.
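NameNode Federation splits the HDFS namespace across several independent NameNodes. One common way for clients to find the right NameNode is a client-side mount table (Hadoop's ViewFs), which maps path prefixes to namespaces. The sketch below illustrates only the longest-prefix lookup idea. It is not HDFS code, and the mount points and NameNode names are hypothetical.

```java
import java.util.Map;
import java.util.Optional;
import java.util.TreeMap;

// Illustrative ViewFs-style mount table: each mount point (a path prefix,
// given without a trailing slash) belongs to one federated NameNode.
// Mount points and NameNode names are hypothetical.
final class MountTable {
    private final Map<String, String> mounts = new TreeMap<>();

    void addMount(String prefix, String nameNode) {
        mounts.put(prefix, nameNode);
    }

    // Resolves a path to the NameNode of the longest matching mount point.
    // A prefix matches only at a path-component boundary, so "/data" does not match "/database".
    Optional<String> resolve(String path) {
        String best = null;
        for (String prefix : mounts.keySet()) {
            boolean matches = path.equals(prefix) || path.startsWith(prefix + "/");
            if (matches && (best == null || prefix.length() > best.length())) {
                best = prefix;
            }
        }
        return best == null ? Optional.empty() : Optional.of(mounts.get(best));
    }
}
```

For example, with `/data` mounted on one NameNode and `/data/logs` on another, a request for `/data/logs/app.log` resolves to the second. Each NameNode therefore only holds the metadata for its own part of the tree.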

The Best Pocket-Friendly Multitools For Tasks Big And Small

Full size multitools are great, but they’re normally too big to fit in you…

Why Texted Videos Look Better on iPhone Than Android

How to Use Instapaper in the EU

Wednesday, 27 June 2018

Geek Trivia: In The 1930s There Were Huge Numbers Of Cookbooks Devoted To Cooking With?

Google Maps Just Got Better at Pointing Out Things To Do Nearby

Facebook Wants to Save You From Spoilers With 30-Day Keyword Mute Feature

Between the World Cup and Avengers movies, your Facebook feed is a minefield of spoilers.

AIM Is Up and Running on a Third Party Server, Here’s How to Use It

How to Disable Screen Auto-Rotation in Windows 10

How to Get Your Amazon Prime Discounts at Whole Foods

Amazon’s finished rolling out discounts for Prime members to every Whole Foods in the nation.

How to Make an Apple Store or Genius Bar Appointment

Announcing RStudio and Databricks Integration

At Databricks, we are thrilled to announce the integration of RStudio with the Databricks Unified Analytics Platform. You can try it out now with this notebook (Rmd | HTML) or visit us at www.databricks.com/rstudio.

For R practitioners looking at scaling out R-based advanced analytics to big data, Databricks provides a Unified Analytics Platform that gets up and running in seconds, integrates with RStudio to provide ease of use, and enables you to automatically run and execute R workloads at unprecedented scale across single or multiple nodes.

Integrating Databricks and RStudio together allows data scientists to address a number of challenges including:

- Increase productivity among your data science teams: Data scientists can use their favorite R IDE together with SparkR or sparklyr to execute jobs on Spark and scale their R-based analytics. At the same time, you can get your environment up and running quickly, providing scale without the need for cluster management.

- Simplify access and provide the best possible dataset: R users can access the full ETL capabilities of Databricks to prepare relevant datasets, including optimizing data formats, cleaning up data, and joining datasets to provide the perfect dataset for your analytics.

- Scale R-based analytics to big data: Move from data science to big data science by scaling current R-based analysis up to big data volumes with Apache Spark running on Databricks. At the same time, you can keep costs under control with Databricks auto-scaling, which automatically scales usage up and down based on your analytics needs.

Introducing Databricks RStudio Integration

With Databricks RStudio Integration, both popular R packages for interacting with Apache Spark, SparkR and sparklyr, can be used inside the RStudio IDE on Databricks. When multiple users use a cluster, each creates a separate SparkR Context or sparklyr connection, but they all talk to a single Databricks-managed Spark application, allowing unique opportunities for collaboration between users. Together, RStudio can take advantage of Databricks’ cluster management and Apache Spark to perform tasks such as massive model selection, as noted in the figure below.

You can run this demo on your own using this k-nearest neighbors (KNN) regression demo (Rmd | HTML).
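The demo itself is written in R and is not reproduced here. The sketch below is a rough, single-machine illustration of what model selection means for k-nearest-neighbors regression. It predicts by averaging the targets of the k nearest training points and picks the k with the lowest validation mean squared error. Names and data are hypothetical. The post describes running this kind of search at scale with Apache Spark on Databricks, whereas here it is a plain loop.

```java
import java.util.Arrays;
import java.util.Comparator;

// Minimal k-nearest-neighbors regression with model selection over k (illustrative only).
final class KnnModelSelection {

    // Predicts y for query x as the mean target of the k nearest training points (Euclidean distance).
    static double predict(double[][] trainX, double[] trainY, double[] x, int k) {
        Integer[] idx = new Integer[trainX.length];
        for (int i = 0; i < idx.length; i++) idx[i] = i;
        // Sort training indices by distance to the query point
        Arrays.sort(idx, Comparator.comparingDouble((Integer i) -> squaredDistance(trainX[i], x)));
        int n = Math.min(k, idx.length);
        double sum = 0;
        for (int i = 0; i < n; i++) sum += trainY[idx[i]];
        return sum / n;
    }

    static double squaredDistance(double[] a, double[] b) {
        double s = 0;
        for (int i = 0; i < a.length; i++) {
            double d = a[i] - b[i];
            s += d * d;
        }
        return s;
    }

    // Mean squared error of the k-NN predictions on a held-out validation set.
    static double validationMse(double[][] trainX, double[] trainY, double[][] valX, double[] valY, int k) {
        double err = 0;
        for (int i = 0; i < valX.length; i++) {
            double d = predict(trainX, trainY, valX[i], k) - valY[i];
            err += d * d;
        }
        return err / valX.length;
    }

    // Returns the candidate k with the lowest validation MSE (ties keep the earlier candidate).
    static int selectK(double[][] trainX, double[] trainY, double[][] valX, double[] valY, int[] candidates) {
        int bestK = candidates[0];
        double bestErr = Double.POSITIVE_INFINITY;
        for (int k : candidates) {
            double err = validationMse(trainX, trainY, valX, valY, k);
            if (err < bestErr) {
                bestErr = err;
                bestK = k;
            }
        }
        return bestK;
    }
}
```

Each candidate k is evaluated independently of the others, which is what makes this kind of search easy to spread across a cluster.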

Next Steps

Our goal is to make R-based analytics easier to use and more scalable with RStudio and Databricks. To dive deeper into the RStudio integration architecture, technical details on how users can access RStudio on Databricks clusters, and examples of the power of distributed computing and the interactivity of RStudio – and to get started today, visit www.databricks.com/rstudio.

--

Try Databricks for free. Get started today.

The post Announcing RStudio and Databricks Integration appeared first on Databricks.

How to Listen to Podcasts on Google Home

How to Protect Your Camera and Lenses from Damage, Dust, and Scratches

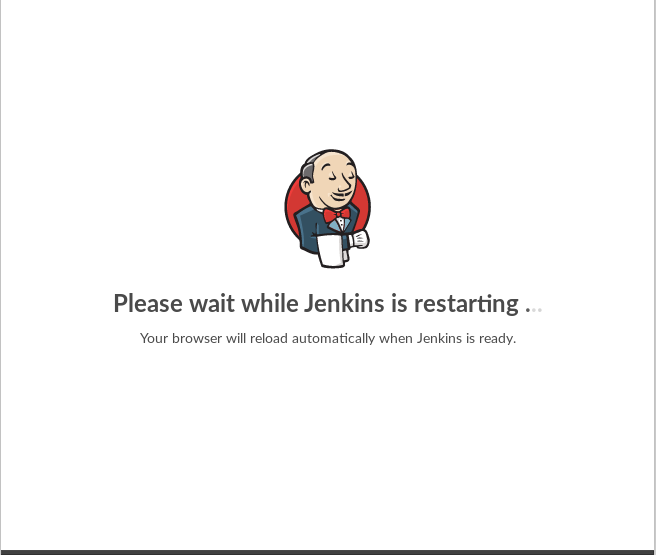

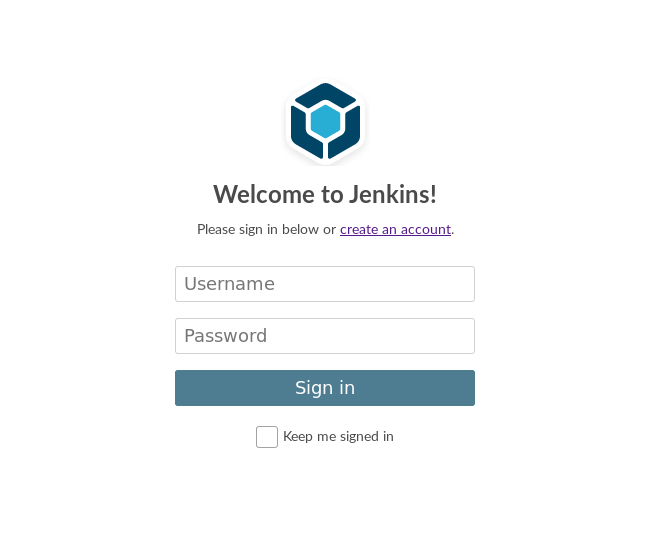

New design, UX and extensibility digest for login page et al.

This blog post introduces the new design for the login and sign-up forms and the "Jenkins is (re)starting" pages introduced in Jenkins 2.128. The first part of the post is an introduction to the new design and UX for Jenkins users. The latter part discusses extensibility in a more technical manner and is aimed at plugin developers.

Overview

The recent changes to some core pages provide a new design and UX, and also drop all external dependencies to prevent any possible malicious JavaScript introduced by third-party libraries. To be clear, this was never an issue with previous releases of Jenkins, but having read this article, the author believes it makes good points, and that leading by example may raise awareness of data protection.

This meant dropping the Jelly layout library (aka xmlns:l="/lib/layout") as well as the page decorators it supported. However, there is a new SimplePageDecorator extension point (discussed below) that can be used to modify the look and feel of the login and sign-up pages.

The following pages have given a new design:

-

Jenkins is (re)starting pages

-

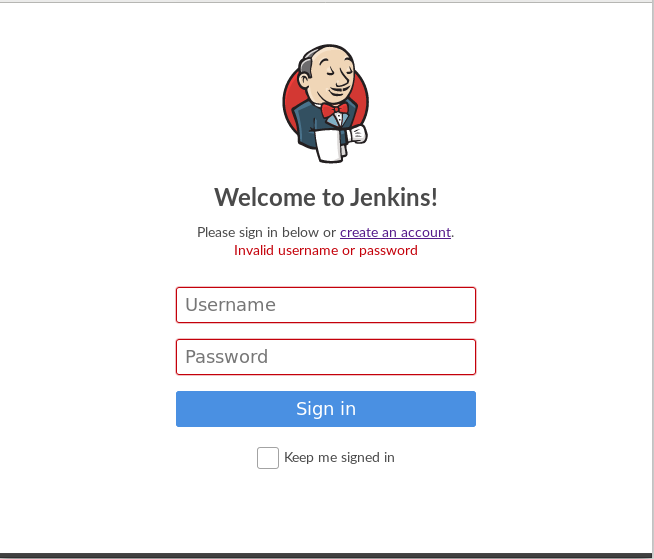

Login

-

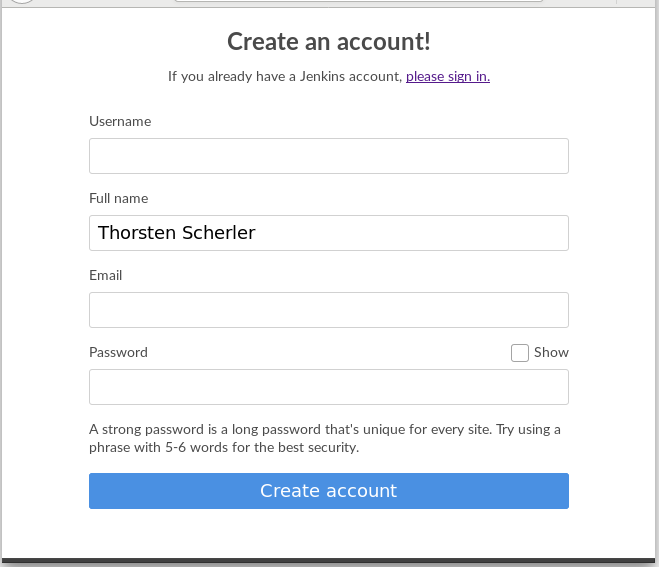

Sign up

UX enhancement

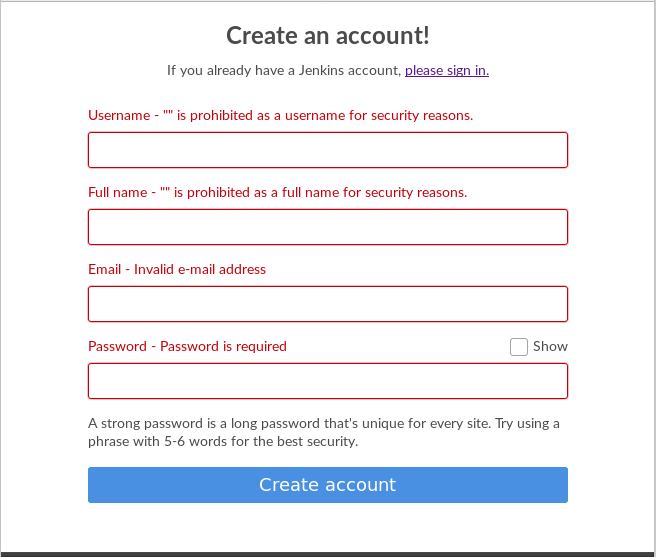

Form validation has changed to give inline feedback about data validation errors in the same form.

- Login
- Sign up

The above image shows that validation is now performed on all input fields, rather than stopping at the first error found, which should lead to fewer retry cycles.
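The change from stopping at the first error to validating every field can be illustrated with a short sketch. This is not the Jenkins sign-up code. The field names, rules, and messages are hypothetical. The point is that each check runs independently and all failures are collected, so the user sees every problem in one round trip.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sign-up validation that checks every field and reports all errors at once,
// instead of returning as soon as the first check fails.
final class SignupValidator {
    static Map<String, String> validate(String username, String password, String email) {
        Map<String, String> errors = new LinkedHashMap<>(); // field name -> message, in form order
        if (username == null || username.trim().isEmpty()) {
            errors.put("username", "User name is required");
        }
        if (password == null || password.length() < 8) {
            errors.put("password", "Password must be at least 8 characters");
        }
        if (email == null || !email.contains("@")) {
            errors.put("email", "Invalid e-mail address");
        }
        return errors; // an empty map means the form is valid
    }
}
```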

Instead of forcing the user to repeat the password, the new UX introduces the possibility of displaying the password in clear text. In addition, a basic password strength meter indicates the strength of the password to the user while she enters it.
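The post does not say how the strength meter computes its score. A common, simple heuristic awards points for length and for character variety. The sketch below assumes that heuristic with hypothetical thresholds, and it is not the scoring Jenkins uses.

```java
// Hypothetical password strength heuristic (not Jenkins' implementation):
// one point each for length >= 8, length >= 12, a lowercase letter,
// an uppercase letter, a digit, and a non-alphanumeric symbol.
final class PasswordStrength {
    static int score(String password) {
        int score = 0;
        if (password.length() >= 8) score++;
        if (password.length() >= 12) score++;
        if (password.chars().anyMatch(Character::isLowerCase)) score++;
        if (password.chars().anyMatch(Character::isUpperCase)) score++;
        if (password.chars().anyMatch(Character::isDigit)) score++;
        if (password.chars().anyMatch(c -> !Character.isLetterOrDigit(c))) score++;
        return score; // 0 (weakest) to 6 (strongest)
    }

    // Maps the numeric score to the label a meter might display.
    static String label(String password) {
        int s = score(password);
        if (s <= 2) return "weak";
        if (s <= 4) return "moderate";
        return "strong";
    }
}
```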

Customizing the UI

The (re)starting screens do not support the concept of decorators very well, hence the decision not to support decorators on these pages.

The SimplePageDecorator is the key component for customization and uses three different files to allow overriding the look and feel of the login and signup pages.

- simple-head.jelly
- simple-header.jelly
- simple-footer.jelly

All of the above SimplePageDecorator Jelly files are supported in the login page. The following snippet is a minimal excerpt of the login page, showing how it makes use of SimplePageDecorator.

```xml
<?jelly escape-by-default='true'?>
<j:jelly xmlns:j="jelly:core" xmlns:st="jelly:stapler">
  <j:new var="h" className="hudson.Functions"/>
  <html>
    <head>
      <!-- css styling, will fallback to default implementation -->
      <st:include it="${h.simpleDecorator}" page="simple-head.jelly" optional="true"/>
    </head>
    <body>
      <div class="simple-page" role="main">
        <st:include it="${h.simpleDecorator}" page="simple-header.jelly" optional="true"/>
      </div>
      <div class="footer">
        <st:include it="${h.simpleDecorator}" page="simple-footer.jelly" optional="true"/>
      </div>
    </body>
  </html>
</j:jelly>
```

The sign-up page only supports simple-head.jelly:

```xml
<?jelly escape-by-default='true'?>
<j:jelly xmlns:j="jelly:core" xmlns:st="jelly:stapler">
  <j:new var="h" className="hudson.Functions"/>
  <html>
    <head>
      <!-- css styling, will fallback to default implementation -->
      <st:include it="${h.simpleDecorator}" page="simple-head.jelly" optional="true"/>
    </head>
  </html>
</j:jelly>
```

SimplePageDecorator - custom implementations

Have a look at the Login Theme Plugin (currently unreleased), which allows you to configure your own custom content to be injected into the new login/sign-up page.

To allow easy customization, the decorator uses only one implementation, on a “first come, first served” principle. If Jenkins finds an extension of SimplePageDecorator, it will use the Jelly files provided by that plugin. Otherwise, Jenkins falls back to the default implementation.

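```java
import hudson.Extension;
import jenkins.model.SimplePageDecorator;

// Registered with Jenkins through the @Extension annotation
@Extension
public class MySimplePageDecorator extends SimplePageDecorator {

    // Exposed to the Jelly views as ${it.productName}
    public String getProductName() {
        return "MyJenkins";
    }
}
```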

The above will take precedence over the default because the default implementation has a very low ordinal (@Extension(ordinal=-9999)). If you have competing plugins implementing SimplePageDecorator, the implementation with the highest ordinal will be used.
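The "highest ordinal wins" rule can be modeled in a few lines of plain Java. This sketch models only that rule. `Candidate` and `pick` are hypothetical names, not Jenkins APIs, and in Jenkins itself the ordering is handled by the extension machinery.

```java
import java.util.Comparator;
import java.util.List;

// Models "highest ordinal wins" among competing decorator implementations.
final class DecoratorSelection {
    static final class Candidate {
        final String name;
        final double ordinal; // @Extension(ordinal=...) is a double; the default is 0
        Candidate(String name, double ordinal) {
            this.name = name;
            this.ordinal = ordinal;
        }
    }

    // Returns the candidate with the largest ordinal.
    static Candidate pick(List<Candidate> candidates) {
        return candidates.stream()
                .max(Comparator.comparingDouble(c -> c.ordinal))
                .orElseThrow(IllegalStateException::new);
    }
}
```

With the default at -9999, any plugin decorator left at the default ordinal of 0 wins. A second plugin must declare a higher ordinal to override the first.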

As a simple example, to customize the logo displayed on the login page, create a simple-head.jelly with the following content:

```xml
<?jelly escape-by-default='true'?>
<j:jelly xmlns:j="jelly:core">
  <link rel="stylesheet" href="${resURL}/css/simple-page.css" type="text/css" />
  <link rel="stylesheet" href="${resURL}/css/simple-page.theme.css" type="text/css" />
  <style>
    .simple-page .logo {
      background-image: url('${resURL}/plugin/YOUR_PLUGIN/icons/my.svg');
      background-repeat: no-repeat;
      background-position: 50% 0;
      height: 130px;
    }
  </style>
  <link rel="stylesheet" href="${resURL}/css/simple-page-forms.css" type="text/css" />
</j:jelly>
```

To customize the login page further, create a simple-header.jelly like this:

```xml
<?jelly escape-by-default='true'?>
<j:jelly xmlns:j="jelly:core">
  <div id="loginIntro">
    <div class="logo"> </div>
    <h1 id="productName">Welcome to ${it.productName}!</h1>
  </div>
</j:jelly>
```

For example, I used this technique to create a prototype of a login page for a CloudBees product I am working on:

Conclusion

We hope you like the recent changes to some core pages, as well as the new design and UX. We also hope you feel empowered to customize the look and feel to suit your needs with the SimplePageDecorator.

Tuesday, 26 June 2018

Highlights from first expanded Spark + AI Summit

Keynotes show how Unified Analytics (Data + AI) is accelerating innovation

Databricks hosted the first expanded Spark + AI Summit (formerly Spark Summit) at Moscone Center in San Francisco just a couple of weeks ago, and the conference drew over 4,000 Apache Spark and machine learning enthusiasts. The overall theme of unifying data + AI technologies and unifying data science + engineering organizations to accelerate innovation resonated in many sessions across Spark + AI Summit 2018, including the keynotes and more than 200 technical sessions on big data and machine learning.

Here are a few highlights from the keynotes:

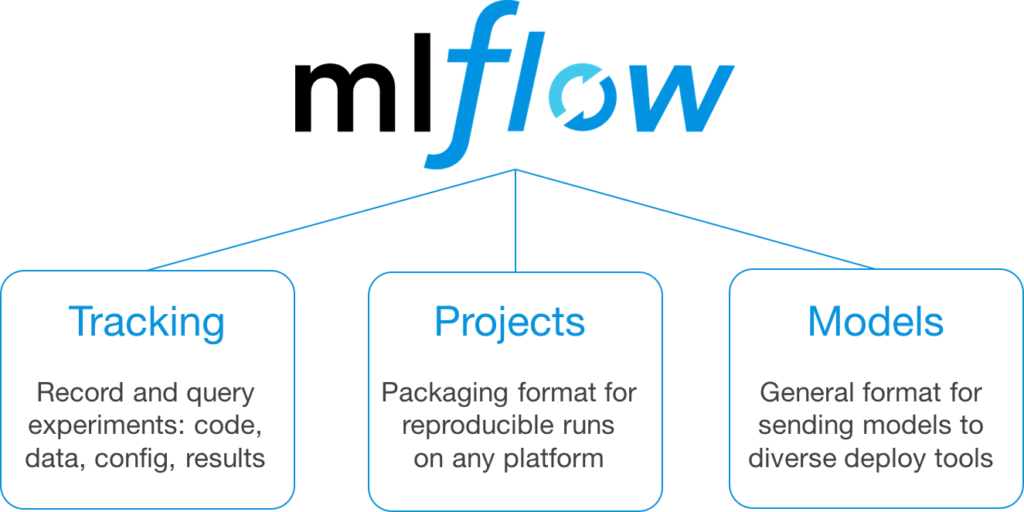

Matei Zaharia, the original creator of Apache Spark and co-founder of Databricks, introduced MLflow – a new open source machine learning platform to address the challenges of tracking experiments, reproducing results, and deploying models on cloud and on-premise infrastructures.

Dominique Brezinski, principal engineer at Apple, discussed the emerging data and analytics challenges in the world of security monitoring and threat response with the growing volumes of log and telemetry data. Michael Armbrust, the creator of Databricks Delta, joined Dom on stage to showcase the fruits of collaboration with Dom’s team – a stable and optimized platform for Unified Analytics that allows the security team to focus on analyzing real-time data correlated with historical data spread across years using streaming, SQL, graph, and ML.

Ali Ghodsi, co-founder and CEO of Databricks, discussed the power of Unified Analytics and key innovations from Databricks (Databricks Delta & Databricks Runtime for ML) to tackle data science and engineering challenges. In this keynote, Ali covers the story of the inception of the Spark project at the University of California, Berkeley, and how Databricks has continued to simplify and drive the adoption of Apache Spark in the enterprise with its Unified Analytics platform. He also highlights Databricks’ expanded capabilities for deep learning frameworks such as TensorFlow.

Reynold Xin, co-founder and chief architect at Databricks, kicked off the Summit and presented Project Hydrogen, a development proposal to efficiently integrate the Spark execution engine with popular machine learning and deep learning frameworks.

Ion Stoica, co-founder and Executive Chairman at Databricks, along with Frank Austin Nothaft, Genomics Lead at Databricks, introduced the Databricks Unified Analytics Platform for Genomics. With this unified platform for genomic data processing, tertiary analytics, and machine learning at massive scale, healthcare and life sciences organizations can accelerate the discovery of life-changing treatments and further advancements in personalized and preventative care.

Dive deeper with the technical session videos:

If you are interested in going deeper on a range of data and machine learning topics, you can find the videos of the technical sessions from all of the tracks (Deep Learning Techniques, Productionizing ML, Python and Advanced Analytics, Enterprise Use Cases, Hardware in the Cloud and more) here.

The post Highlights from first expanded Spark + AI Summit appeared first on Databricks.

The 7 Best Budget Office Chairs For Every Need

Having a comfortable and practical office setup is vital to your productivity levels.

Firefox Will Soon Tell You When You’ve Been Pwned

IBM taps emotions for Wimbledon highlights

The Best Free Video Editing Apps for Windows

How to Enable and Use Continued Conversation on Google Home

Best iPad Mounts for Every Purpose

iPads are true general use computers. Some people use them just for work while for others, they’re purely for play.

What’s the Difference Between Canon’s Regular and L-Series Lenses and Which Should You Buy

Jeff Bezos heats up space race against Musk, aims Blue Origin commercial flight next year

How to Install the iOS 12 Beta on Your iPhone or iPad

How to Back Up Your iPhone With iTunes (and When You Should)

Monday, 25 June 2018

Geek Trivia: The Colors Of The Rainbow Were Identified And Set As They Are Now By?

The Best In-Wall Smart Light Switches

If smart bulbs aren’t your thing (although we tend to love them), then smart light switches might be more up your alley.

No Commercial Interruptions: Why The World Cup Feels More Modern Than the NFL in the Netflix Age

Edge Will Aggressively Block Ads on Android and iPhone

What Is An ISO File (And How Do I Use Them)?

How Artificial Intelligence in Healthcare Can Improve Patient Outcomes

Here's how using artificial intelligence in healthcare can help overworked physicians spot existing problems and predict future ones.

The post How Artificial Intelligence in Healthcare Can Improve Patient Outcomes appeared first on Hortonworks.

How to Rotate Your PC’s Screen (or Fix a Sideways Screen)

The Best Couch Co-Op Games For the Xbox One

Sometimes you just want to chill with the competition and play a game together.

How to Round Off Decimal Values in Excel

How to Fix a PC Stuck on “Don’t Turn Off” During Windows Updates

Sunday, 24 June 2018

Geek Trivia: Daniel Stern, Best Known For The Role Of Marv In “Home Alone”, Was Also The Narrator Of?

The 5 Best Handheld Vacuums For Cleaning Up Quick Spills

Vacuum cleaner options are vast and wonderful. You can buy a heavy bag on wheels, a stick vacuum cleaner, or something even smaller than that.

How to Archive Your Data (Virtually) Forever

Saturday, 23 June 2018

Geek Trivia: The Largest Library Of Inks In The World Belongs To?

8 Things You Might Not Know About Chromebooks

Friday, 22 June 2018

The Steam Link Is The Best Living Room Companion For PC Gamers

If you’re a PC gamer with even a passing interest in a living room setup, you should grab a Steam Link.

Geek Trivia: The Wallace and Gromit Short Film “A Grand Day Out” Inadvertently, With A Single Joke, Saved Which Of These British Things?

The Best Rugged Flash Storage Drives

People who frequently travel with important or sensitive data need some way to store it—preferably on a medium that can take a beating.

Free Download: Measure Anything Using Your Android Camera

A Retail Use Case of IT Governance. Starring: RDBMS, NoSQL, and Blockchain Technology

Why Every App Pushes Notifications Now, and How to Stop It

What’s the Difference Between the Fire 7, 8, and 10 Tablets?

Amazon’s Fire Tablets offer some of the best bang for your buck you can get in a tablet today, but there are more differences to these ta…

Android Messages for Web: What It Is and How to Use It

What Makes a Cine Lens Different From Regular Lenses?

How “Windows Sonic” Spatial Sound Works

Thursday, 21 June 2018

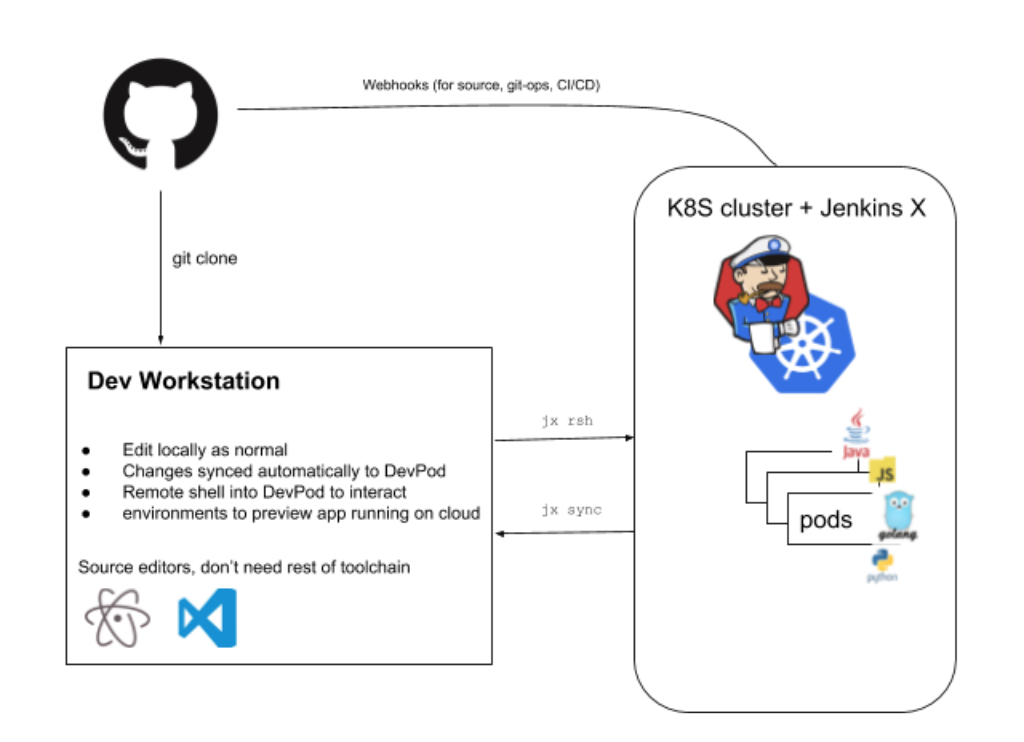

Using Jenkins X DevPods for development

I use macOS day to day, and often struggle to keep my devtools up to date. This isn’t any fault of packaging or tools, more just that I get tired of seeing the beachball:

The demands on dev machines keep growing: developers these days work across a more diverse set of technologies than just a JVM or a single scripting language.

Keeping up to date is a drag on time (and thus money). There are lots of costs involved with development, and I have written about the machine cost for development (how using something like GKE can be much cheaper than buying a new machine), but there is also the cost of a developer’s time. Thankfully, there are ways to apply the same smarts here to save time as well as money. And time is money, or money is time?

Given all the work done in automating the detection and installation of required tools, environments, and libraries when you run ‘jx import’ in Jenkins X, it makes sense to also make those available at development time, and so the concept of “DevPods” was born.

The pod part of the name comes from the Kubernetes concept of pods (but you don’t have to know about Kubernetes or pods to use Jenkins X. There is a lot to Kubernetes but Jenkins X aims to provide a developer experience that doesn’t require you to understand it).

Why not use Jenkins X from code editing all the way to production, before you even commit the code or open a pull request? All the tools are there, all the environments are there, ready to use (as they are used at CI time!).

This rounds out the picture: Jenkins X aims to deal with the whole lifecycle for you, from ideas/issues, change requests, testing, CI/CD, security and compliance verification, rollout and monitoring. So it totally makes sense to include the actual dev time tools.

If you have an existing project, you can create a DevPod by running (with the jx command):

```shell
jx create devpod
```

This will detect what type of project it is (using build packs) and create a DevPod for you with all the tools pre-installed and ready to go.
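The post says jx uses build packs to detect the project type. The sketch below illustrates the general idea of detection by marker files checked in priority order. The file-to-pack mapping is a hypothetical simplification and does not reflect the actual detection rules in Jenkins X.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;
import java.util.function.Predicate;

// Hypothetical project-type detection: the first marker file found decides the build pack.
final class BuildPackDetector {
    // Insertion order is the priority order (hypothetical mapping).
    private static final Map<String, String> MARKERS = new LinkedHashMap<>();
    static {
        MARKERS.put("pom.xml", "maven");
        MARKERS.put("build.gradle", "gradle");
        MARKERS.put("package.json", "javascript");
        MARKERS.put("requirements.txt", "python");
    }

    // Core rule, parameterized on how file existence is checked (handy for testing).
    static Optional<String> detectWith(Predicate<String> fileExists) {
        for (Map.Entry<String, String> e : MARKERS.entrySet()) {
            if (fileExists.test(e.getKey())) {
                return Optional.of(e.getValue());
            }
        }
        return Optional.empty();
    }

    // Convenience overload that checks a real project directory.
    static Optional<String> detect(Path projectDir) {
        return detectWith(name -> Files.exists(projectDir.resolve(name)));
    }
}
```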

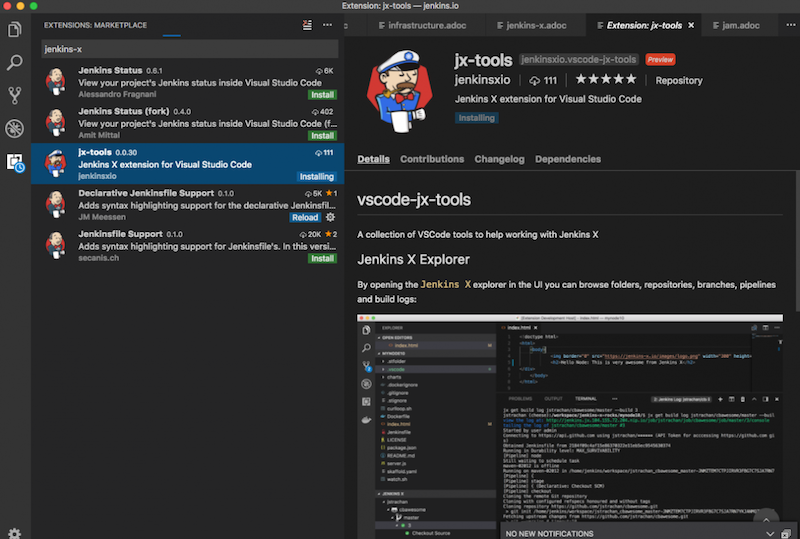

Obviously, at this point you want to be able to make changes to your app and try it out. Either run unit tests in the DevPod, or perhaps see a dev version of the app running in your browser (if it is a web app). Web-based code editors have been a holy grail for some time, but have never quite taken off in the developer mainstream (despite there being excellent ones out there, most developers prefer to develop on their desktop). Ironically, the current crop of popular editors is based on Electron, which is actually a web technology stack, but runs locally (Visual Studio Code is my personal favourite at the moment). In fact, Visual Studio Code has a Jenkins X extension (but you don’t have to use it):

To get your changes up to the Dev Pod, in a fresh shell run (and leave it running):

```shell
jx sync
```

This will watch for any changes locally (say you want to edit files locally on your desktop) and sync them to the Dev Pod.
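Deciding what to push to the DevPod comes down to noticing which local files changed. The post does not describe how jx sync works internally. One simple approach, assumed here for illustration only, is to compare two snapshots of file modification times. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeSet;

// Hypothetical change detection: given two snapshots (path -> last-modified millis),
// return the paths that were added, modified, or deleted and therefore need syncing.
final class SyncDiff {
    static List<String> changedPaths(Map<String, Long> before, Map<String, Long> after) {
        TreeSet<String> all = new TreeSet<>(before.keySet());
        all.addAll(after.keySet());
        List<String> changed = new ArrayList<>();
        for (String path : all) {
            Long oldTime = before.get(path);
            Long newTime = after.get(path);
            // Added (no old time), deleted (no new time), or modified (times differ)
            if (oldTime == null || newTime == null || !oldTime.equals(newTime)) {
                changed.add(path);
            }
        }
        return changed; // sorted, because TreeSet iterates in natural order
    }
}
```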

Finally, you can have the Dev Pod automatically deploy an “edit” version of the app on every single change you make in your editor:

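```shell
# On your workstation: create or reuse a DevPod and open a shell in it
jx create devpod --sync --reuse
# Inside the DevPod shell: pick up changes and deploy them to the "edit" app
./watch.sh
```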

The first command will create or reuse an existing Dev Pod and open a shell to it. The watch command will then pick up any changes and deploy them to your “edit” app. You can keep this open in your browser, make a change, and just refresh it. You don’t need to run any dev tools locally, or any manual commands in the Dev Pod, to do this; it takes care of that.

You can have many DevPods running (jx get devpods), and you could stop them at the end of the day (jx delete devpod), start them at the beginning, if you like (or as I say: keep them running in the hours between coffee and beer). A pod uses resources on your cluster, and as the Jenkins X project fleshes out its support for dev tools (via things like VS Code extensions) you can expect even these few steps to be automated away in the near future, so many of the above instructions will not be needed!

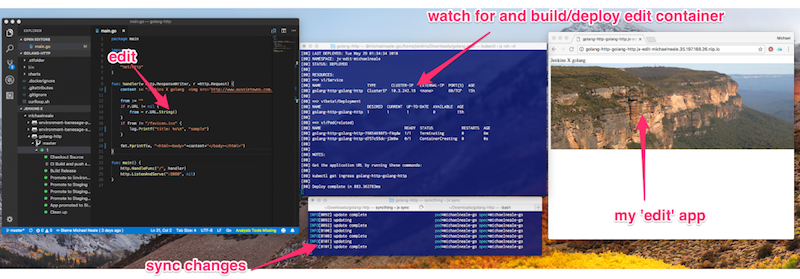

End-to-end experience

So, bringing it all together, let me show a very wide (you may need to zoom out) screenshot of this workflow:

From left to right:

- I have my editor (if you look closely, you can see the Jenkins X extension showing the state of apps, pipelines and the environments they are deployed to).

- In the middle, I have jx sync running, pushing changes up to the cloud from the editor, and also the ‘watch’ script running in the DevPod. This means that for every change I make in my editor, a temporary version of the app (and its dependencies) is deployed.

- On the right is my browser open to the “edit” version of the app. Jenkins X automatically creates an “edit” environment for live changes, so if I make a change to my source on the left, the code is synced, built/tested and updated so I can see the change on the right (but I didn’t build anything locally; it all happens in the DevPod on Jenkins X).

On Visual Studio Code: the Jenkins X extension for Visual Studio Code can automate the creation of DevPods and syncing for you. Expect richer support soon for this editor and others.

Explaining things with pictures

To give a big picture of how this hangs together:

In my example, GitHub is still involved, but I don’t push any changes back to it until I am happy with the state of my “edit app” and changes. I run the editor on my local workstation and jx takes care of the rest. This gives a tight feedback loop for changes. Of course, you can use any editor you like, and build and test changes locally (there is no requirement to use DevPods to make use of Jenkins X).

Jenkins X comes with some ready-to-go environments: development, staging and production (you can add more if you like). These are implemented as Kubernetes namespaces to keep the wrong apps from talking to the wrong place. The development environment is where the dev tools live, and this is also where the DevPods can live! This makes sense, as all the tools are available there, and it saves you the hassle of having slightly different versions of tools on your local workstation than the ones used in your pipeline.

DevPods are an interesting idea, and at the very least a cool name! There will be many more improvements/enhancements in this area, so keep an eye out for them. They are a work in progress, so do check the documentation page for better ways to use them.

Some more reading:

- Docs on DevPods on jenkins-x.io
- The Visual Studio Code extension for Jenkins X (what a different world: an open source editor by Microsoft!)
- James Strachan’s great intro to Jenkins X talk at Devoxx-UK also includes a DevPod demo

The Best Game Controllers for Android

If you’re looking to kill some time with a game on your phone, you don’t have to sit around and tap away on the screen.

Geek Trivia: The Oldest Currency Still In Use Is The?

Spark + AI Summit Europe Agenda Announced

London, as a financial center and cosmopolitan city, has its historical charm, cultural draw, and technical allure for everyone, whether you are an artist, entrepreneur or high-tech engineer. As such, we are excited to announce that London is our next stop for Spark + AI Summit Europe, from October 2-4, 2018, so prepare yourself for the largest Spark + AI community gathering in EMEA!

Today, we announced our agenda for Spark + AI Summit Europe, with over 100 sessions across 11 tracks, including AI Use Cases, Deep Learning Techniques, Productionizing Machine Learning, and Apache Spark Streaming. Sign up before July 27th for early registration and save £300.00.

While we will announce all our exceptional keynotes soon, we are delighted to have these notable technical visionaries as part of the keynotes: Databricks CEO and Co-founder Ali Ghodsi; Matei Zaharia, the original creator of Apache Spark and Databricks chief technologist; Reynold Xin, Databricks co-founder and chief architect; and Soumith Chintala, creator of PyTorch and AI researcher at Facebook.

Along with these visionary keynotes, our agenda features a stellar lineup of community talks led by engineers, data scientists, researchers, entrepreneurs, and machine learning experts from Facebook, Microsoft, Uber, CERN, IBM, Intel, Red Hat, Pinterest and, of course, Databricks. There is also a full day of hands-on Apache Spark and Deep Learning training, with courses for both beginners and advanced users, on both AWS and Azure clouds.

All of the above keynotes and sessions will reinforce the idea that Data + AI and Unified Analytics are an integral part of accelerating innovation. For example, early this month, we had our first expanded Spark + AI Summit at Moscone Center in San Francisco, where over 4,000 Spark and Machine Learning enthusiasts attended, representing over 40 countries and regions. The overall theme of Data + AI as a unifying and driving force of innovation resonated in many sessions, including notable keynotes, as Apache Spark forays into new frontiers because of its capability to unify new data workloads and capacity to process data at scale.

With four new tracks, over 180 sessions, Apache Spark and Deep Learning training on both AWS and Azure Cloud, and myriad community-related events, the San Francisco summit was a huge success and a new experience for many attendees! One attendee notes:

For a moment I thought I entered a music festival and not #SparkAISummit. The lighting and music was on point. pic.twitter.com/o09EHuaXBy

— Neelesh Salian (@NeelS7) June 6, 2018

We want our European attendees to have a similar experience and gain the same knowledge, so make this your moment: keep calm and come to London in October. With early bird registration, you can save £300.00.

The post Spark + AI Summit Europe Agenda Announced appeared first on Databricks.

AT&T’s New $15/Month TV Service Will Start As an Add-On For New Unlimited Wireless Plans

AT&T is launching a new online TV service for $15/month, undercutting the cheapest offering from Sling.

Your Favorite PC Games Might Be Watching You Play (Just Like Mobile Games Always Have)

How to Create and Work with Multilevel Lists in Microsoft Word

The Best Video Players for Android

How to Use the Ping Command to Test Your Network

How to Fix All of Windows 10’s Annoyances

Wednesday, 20 June 2018

Geek Trivia: Which Of These Coffee Brewing Methods Was Invented To Speed Up Coffee Preparation?

Microsoft News is a Clean App For Browsing and Reading News on Mobile, Offers Dark Mode

Brace Yourself: Video Ads Are Coming to Facebook Messenger

AMC Launches a Better MoviePass Called A-List For $20 Per Month

Today, AMC announced a $20/month theater subscription to rival MoviePass.

How To Recover Your Forgotten Microsoft Account Password

How to Get Started Tracking Social Media Analytics with Free Tools

Ten Gadgets To Take The Stress Out Of Long Flights

Air travel can be…less than pleasant. Doubly so if you have to deal with the American TSA.

What Is A File Extension?

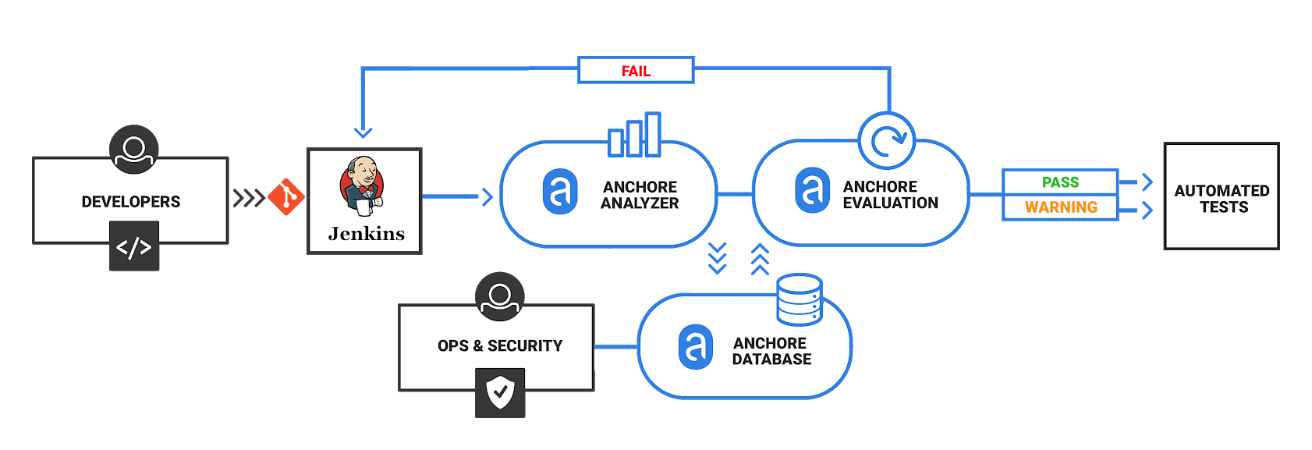

Securing your Jenkins CI/CD Container Pipeline with Anchore (in under 10 minutes)

(adapted from this blog post by Daniel Nurmi)

As more and more Jenkins users ship Docker containers, it is worth thinking about the security implications of this model, in which the variety of software included by developers has increased dramatically compared with previous models. Security implications in this context include what makes up the image, but also the components of the app that get bundled into your image. Docker images are increasingly becoming a “unit of deployment”, and if you look at a typical app (especially if it is a microservice), many of its components, libraries, and system packages are someone else’s code.

Anchore exists to provide technology that acts as a last line of defense, verifying the contents of these new deployable units against user-specified policies to enforce security and compliance requirements. In this blog you will get a quick tour of this capability, and see how to add the open source Anchore Engine API service into your pipeline to validate that the images you are shipping comply with your specific security requirements.

Key among the fundamental tenets of agile development is the notion of “fail fast, fail often”, which is where CI/CD comes in: a developer commits code to a source code repository such as Git, which automatically triggers Jenkins to build the application and run it through automated tests. If these tests fail, the developer is notified immediately and can quickly correct the code. This level of automation increases overall code quality and speeds up development.

While some may feel that “fail fast” sounds rather negative (especially regarding security), the process is better described as “learn fast”, because mistakes are found earlier in the development cycle, where they can be easily corrected. The increased use of CI/CD platforms such as Jenkins has helped improve the efficiency of development teams and streamlined the testing process. We can leverage the same CI/CD infrastructure to improve the security of our container deployments.

For many organizations the last step before deploying an application is for the security team to perform an audit. This may entail scanning the image for vulnerable software components (like outdated packages that contain known security vulnerabilities) and verifying that the applications and OS are correctly configured. They may also check that the organization’s best practices and compliance policies have been correctly implemented.
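To make the “vulnerable software components” check concrete, here is a minimal Python sketch of the kind of comparison a scanner performs: matching installed packages against known-vulnerable version ranges. The package versions, advisory IDs, and the `KNOWN_VULNS` table are hypothetical, and the naive dotted-integer version comparison is a simplification; real scanners, Anchore included, rely on curated vulnerability feeds and distribution-aware version comparison.

```python
from typing import Dict, List, NamedTuple, Tuple


class Vuln(NamedTuple):
    vuln_id: str   # advisory identifier (hypothetical values below)
    package: str   # affected package name
    fixed_in: str  # first version that is NOT vulnerable
    severity: str  # e.g. "High", "Medium", "Low"


def parse_version(v: str) -> Tuple[int, ...]:
    """Naive dotted-integer parser; real distro versions (epochs,
    Debian revisions, etc.) need a proper comparator."""
    return tuple(int(part) for part in v.split("."))


def find_vulnerable(installed: Dict[str, str], vulns: List[Vuln]) -> List[Vuln]:
    """Return advisories whose package is installed at a version older
    than the fixed-in version."""
    hits = []
    for vuln in vulns:
        version = installed.get(vuln.package)
        if version is not None and parse_version(version) < parse_version(vuln.fixed_in):
            hits.append(vuln)
    return hits


# Hypothetical image contents and advisory data, for illustration only.
INSTALLED = {"openssl": "1.1.0", "zlib": "1.2.11", "bash": "4.4.12"}
KNOWN_VULNS = [
    Vuln("EXAMPLE-0001", "openssl", "1.1.1", "High"),
    Vuln("EXAMPLE-0002", "zlib", "1.2.11", "Medium"),   # already fixed in installed version
    Vuln("EXAMPLE-0003", "curl", "7.61.0", "High"),     # package not installed
]

if __name__ == "__main__":
    for v in find_vulnerable(INSTALLED, KNOWN_VULNS):
        print(v.vuln_id, v.package, v.severity)  # EXAMPLE-0001 openssl High
```

Only openssl is reported: zlib is already at the fixed version and curl is not installed.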

In this post we walk through adding security and compliance checking into the CI/CD process so you can “learn fast” and correct any security or compliance issues early in the development cycle. This document will outline the steps to deploy Anchore’s open source security and compliance scanning engine with Jenkins to add analytics, compliance and governance to your CI/CD pipeline.

Anchore has been designed to plug seamlessly into the CI/CD workflow: a developer commits code to the source code management system, which triggers Jenkins to start a build that creates a container image. In the typical workflow this container image is then run through automated testing. If an image does not meet your organization’s security or compliance requirements, it makes little sense to spend time running automated tests on it; it is better to “learn fast” by failing the build and returning the appropriate reports to the developer so the issue can be addressed.
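The ordering argument can be sketched in a few lines of Python: stages run in sequence and the first failing stage stops the pipeline, so placing the image scan before the test stage means a non-compliant image never consumes test time. The stage names and bodies are hypothetical stand-ins; in Jenkins this behavior comes from the pipeline itself, not from code like this.

```python
from typing import Callable, List, Tuple

# A stage is a (name, callable) pair; the callable returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failure ("fail fast").
    Returns (succeeded, names of the stages that actually ran)."""
    ran: List[str] = []
    for name, step in stages:
        ran.append(name)
        if not step():
            print(f"stage '{name}' failed; skipping remaining stages")
            return False, ran
    return True, ran


if __name__ == "__main__":
    # Hypothetical stage bodies: the security gate fails, so the
    # (expensive) automated tests never run.
    pipeline = [
        ("build", lambda: True),
        ("security-scan", lambda: False),
        ("automated-tests", lambda: True),
    ]
    ok, ran = run_pipeline(pipeline)
    print(ok, ran)  # False ['build', 'security-scan']
```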

Anchore has published a plugin for Jenkins which, along with Anchore’s open source engine or Enterprise offering, allows container analysis and governance to be added quickly into the CI/CD process.

Requirements

This guide presumes the following prerequisites have been met:

- Jenkins 2.x installed and running on a virtual machine or physical server.
- Anchore Engine installed and running, with an accessible engine API URL (later referred to as <anchore_url>) and credentials (later referred to as <anchore_user> and <anchore_pass>) available; see Anchore Engine overview and installation.

Anchore’s Jenkins plugin can work with single node installations or installations with multiple worker nodes.

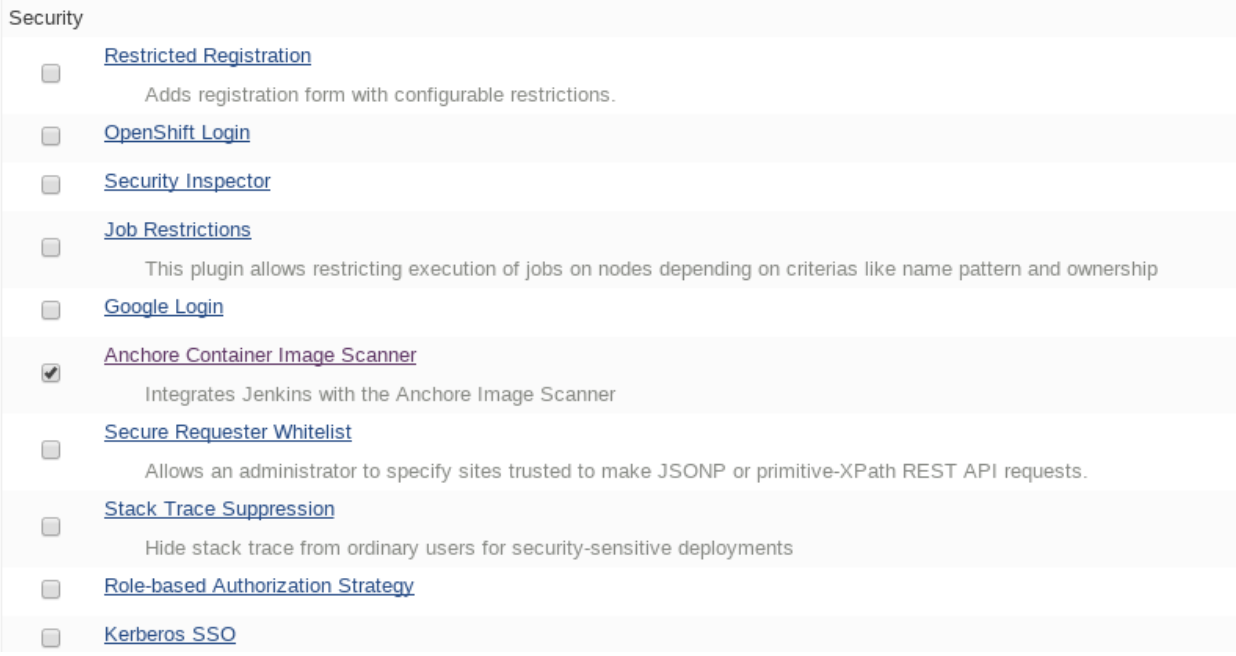

Step 1: Install the Anchore plugin

The Anchore plugin has been published in the Jenkins plugin registry and can be installed on any Jenkins server. From the main Jenkins menu, select Manage Jenkins, then Manage Plugins, open the Available tab, and select and install Anchore Container Image Scanner.

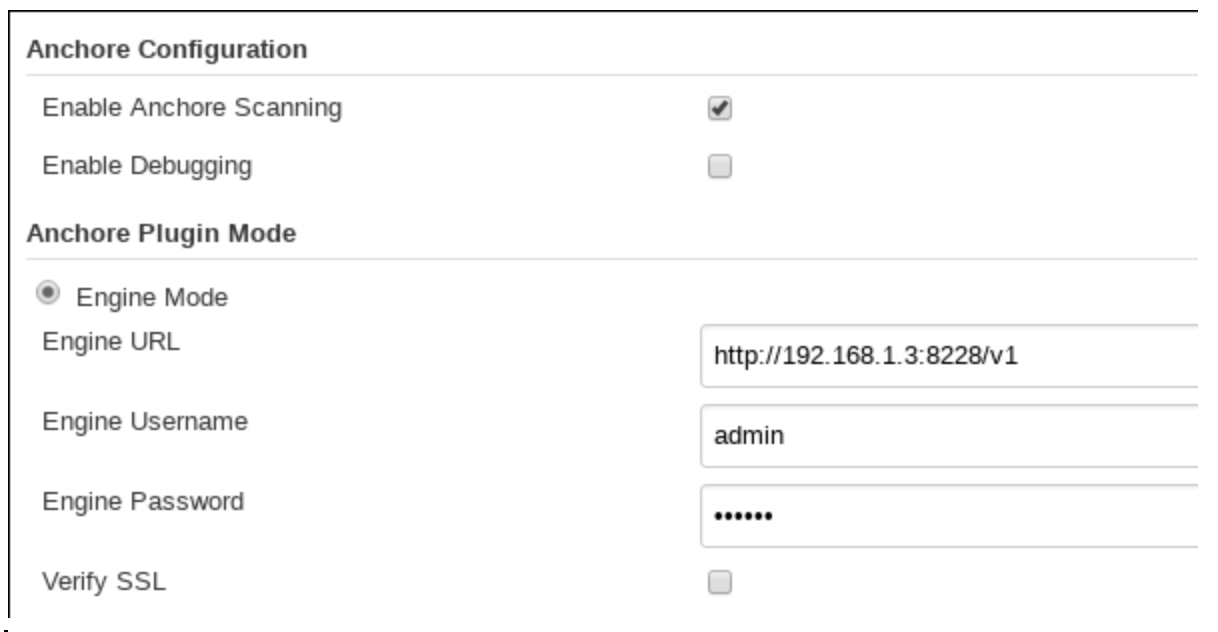

Step 2: Configure the Anchore plugin

Once the Anchore Container Image Scanner plugin is installed, select Manage Jenkins, click Configure System, and locate the Anchore Configuration section. Enter the following parameters in this section:

- Click Enable Anchore Scanning
- Select Engine Mode
- Enter your <anchore_url> in the Engine URL text box, for example: http://your-anchore-engine.com:8228/v1
- Enter your <anchore_user> and <anchore_pass> in the Engine Username and Engine Password fields, respectively
- Click Save

Below is an example of a filled-out configuration section, where we’ve used “http://192.168.1.3:8228/v1” as <anchore_url>, “admin” as <anchore_user>, and “foobar” as <anchore_pass>:

At this point the Anchore plugin is configured on Jenkins and available to any project that needs to perform Anchore security and policy checks as part of its container image build pipeline.

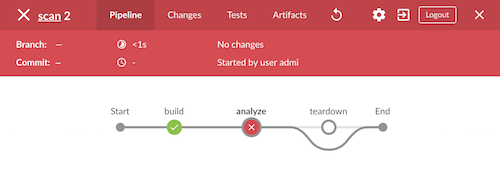

Step 3: Add Anchore image scanning to a pipeline build

In the Pipeline model the entire build process is defined as code. This code can be created, edited and managed in the same way as any other artifact of your software project, or input via the Jenkins UI.

Pipeline builds can be more complex, including forks/joins and parallelism. A pipeline is also more resilient and can survive master node failures and restarts. To add an Anchore scan, add a short code snippet to any existing pipeline that first builds an image and pushes it to a Docker registry. Once the image is available in a registry that your Anchore Engine installation can access, a pipeline script will instruct the Anchore plugin to:

- Send an API call to the Anchore Engine to add the image for analysis
- Wait for analysis of the image to complete by polling the engine
- Send an API call to the Anchore Engine service to perform a policy evaluation
- Retrieve the evaluation result and fail the build if the plugin is configured to fail on a policy evaluation STOP result (it is by default)
- Provide a report of the policy evaluation for review
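The steps listed above amount to a submit, poll, evaluate, and gate loop. The Python sketch below models that loop under stated assumptions: `EngineClient`, its method names, and the status strings `"analyzed"` and `"analysis_failed"` are hypothetical stand-ins, not the real Anchore Engine API or the plugin's code, and `FakeClient` exists only so the logic can run without an engine.

```python
import time
from typing import Callable, Protocol


class EngineClient(Protocol):
    """Hypothetical interface standing in for calls to the Anchore Engine API."""

    def add_image(self, tag: str) -> str: ...                  # returns an image digest
    def analysis_status(self, digest: str) -> str: ...         # e.g. "analyzing", "analyzed"
    def evaluate_policy(self, digest: str, tag: str) -> str: ...  # "STOP", "WARN" or "GO"


def scan_and_gate(
    client: EngineClient,
    tag: str,
    fail_on_stop: bool = True,
    poll_interval: float = 5.0,
    timeout: float = 300.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Return True if the build may continue, False if it should fail."""
    digest = client.add_image(tag)                   # step 1: submit the image
    deadline = clock() + timeout
    while True:                                      # step 2: poll until analyzed
        status = client.analysis_status(digest)
        if status == "analyzed":
            break
        if status == "analysis_failed":
            raise RuntimeError(f"analysis of {tag} failed")
        if clock() >= deadline:
            raise TimeoutError(f"analysis of {tag} not finished after {timeout}s")
        sleep(poll_interval)
    action = client.evaluate_policy(digest, tag)     # steps 3-4: evaluate, retrieve result
    print(f"policy evaluation for {tag}: {action}")  # step 5: report
    return not (fail_on_stop and action == "STOP")   # fail only on STOP, if configured


class FakeClient:
    """In-memory stand-in: 'analyzing' for a few polls, then 'analyzed'."""

    def __init__(self, polls_before_done: int, final_action: str) -> None:
        self.remaining = polls_before_done
        self.final_action = final_action

    def add_image(self, tag: str) -> str:
        return "sha256:example"

    def analysis_status(self, digest: str) -> str:
        if self.remaining > 0:
            self.remaining -= 1
            return "analyzing"
        return "analyzed"

    def evaluate_policy(self, digest: str, tag: str) -> str:
        return self.final_action


if __name__ == "__main__":
    client = FakeClient(polls_before_done=2, final_action="STOP")
    passed = scan_and_gate(client, "docker.io/exampleuser/examplerepo:latest", poll_interval=0.1)
    print("build", "succeeded" if passed else "failed")
```

Injecting `sleep` and `clock` keeps the polling logic testable without real waiting.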

Below is an example end-to-end script that writes a Dockerfile, uses the Docker plugin to build a container image and push it to Docker Hub, runs an Anchore analysis and policy evaluation on the image, and cleans up the built image. In this example, we’re using a pre-configured Docker Hub credential with the ID docker-exampleuser, and exampleuser/examplerepo:latest as the image to build and push. These values need to be changed to reflect your own settings. Alternatively, you can extract just the analyze stage from this example to add an Anchore scan to any pre-existing pipeline script, at any point after a container image has been built and is available in a Docker registry that your anchore-engine service can access.

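```groovy
pipeline {
    agent any
    stages {
        stage('build') {
            steps {
                // Write a minimal Dockerfile for the example image
                sh '''
                    echo 'FROM debian:latest' > Dockerfile
                    echo 'CMD ["/bin/echo", "HELLO WORLD...."]' >> Dockerfile
                '''
                script {
                    // Build and push with the Docker plugin, using the
                    // pre-configured 'docker-exampleuser' Docker Hub credential
                    docker.withRegistry('https://index.docker.io/v1/', 'docker-exampleuser') {
                        def image = docker.build('exampleuser/examplerepo:latest')
                        image.push()
                    }
                }
            }
        }
        stage('analyze') {
            steps {
                // List the image (and its Dockerfile) for the Anchore plugin to scan
                sh 'echo "docker.io/exampleuser/examplerepo:latest `pwd`/Dockerfile" > anchore_images'
                anchore name: 'anchore_images'
            }
        }
        stage('teardown') {
            steps {
                // Remove every image listed in anchore_images from the build node
                sh '''
                    for i in $(awk '{print $1}' anchore_images); do docker rmi "$i"; done
                '''
            }
        }
    }
}
```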

The analyze stage writes out the anchore_images file, which the plugin reads to determine which image to add to Anchore Engine for scanning.
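Judging from the example above, each line of anchore_images holds an image reference optionally followed by the path to its Dockerfile; treat that as an inference from this example rather than a full description of the plugin’s format. A minimal Python sketch for generating and reading such a file might look like this (the helper names are hypothetical):

```python
import tempfile
from pathlib import Path
from typing import List, Optional, Tuple

# One entry per image: (image reference, optional Dockerfile path).
Entry = Tuple[str, Optional[str]]


def write_anchore_images(path: Path, entries: List[Entry]) -> None:
    """Write one '<image> [<dockerfile>]' line per entry, like the echo
    command in the analyze stage. Paths containing spaces are not supported
    by this simple whitespace-separated format."""
    lines = [img if df is None else f"{img} {df}" for img, df in entries]
    path.write_text("\n".join(lines) + "\n")


def read_anchore_images(path: Path) -> List[Entry]:
    """Parse the file back. The first field is the image reference, which is
    also what the teardown stage's awk '{print $1}' extracts."""
    entries: List[Entry] = []
    for line in path.read_text().splitlines():
        fields = line.split()
        if not fields:
            continue  # skip blank lines
        entries.append((fields[0], fields[1] if len(fields) > 1 else None))
    return entries


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        target = Path(tmp) / "anchore_images"
        write_anchore_images(target, [("docker.io/exampleuser/examplerepo:latest", f"{tmp}/Dockerfile")])
        print(target.read_text(), end="")
        print(read_anchore_images(target))
```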

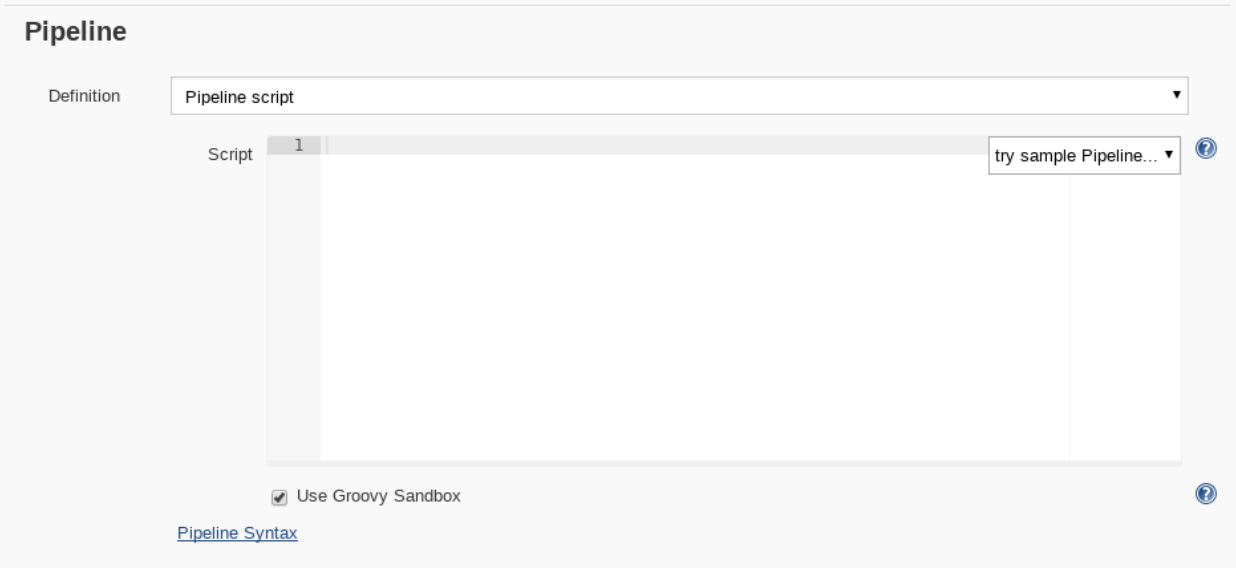

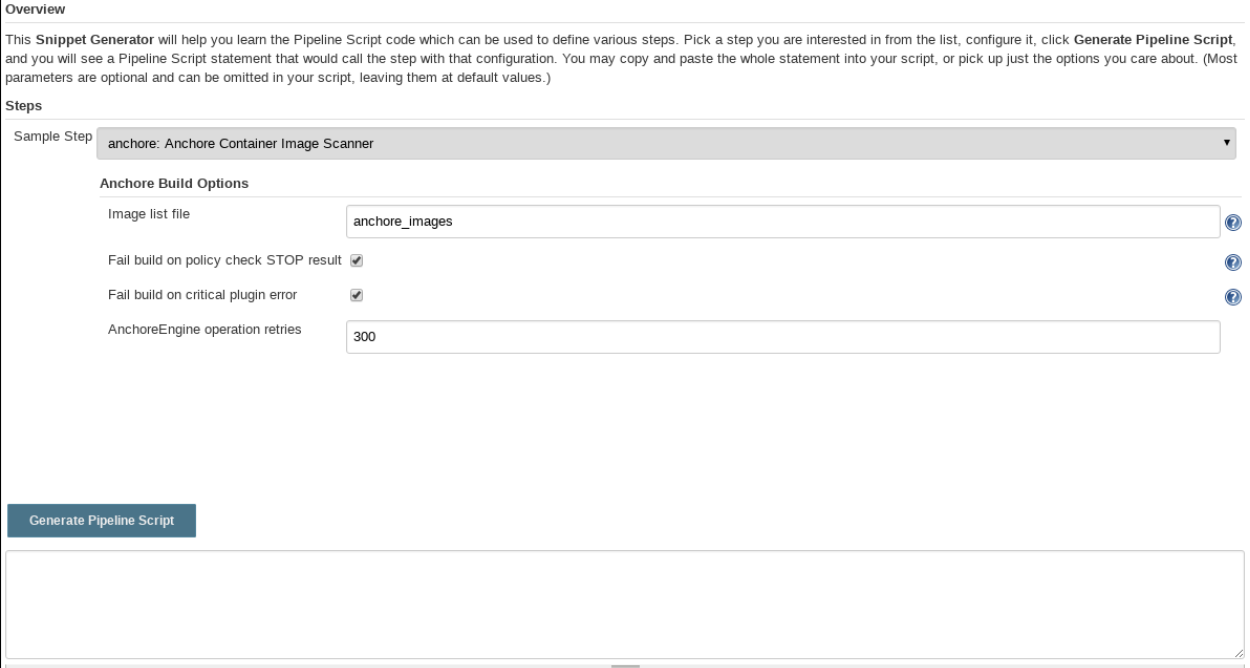

This snippet can be written by hand or generated with the Jenkins UI for any Pipeline project: in the project configuration, select Pipeline Syntax.

This launches the Snippet Generator, where you can enter the available plugin parameters and press the Generate Pipeline Script button to produce a snippet you can use as a starting point.

Using our example from above, next we save the project:

Note that once you are happy with your script, you could also check it into a Jenkinsfile, alongside the source code.

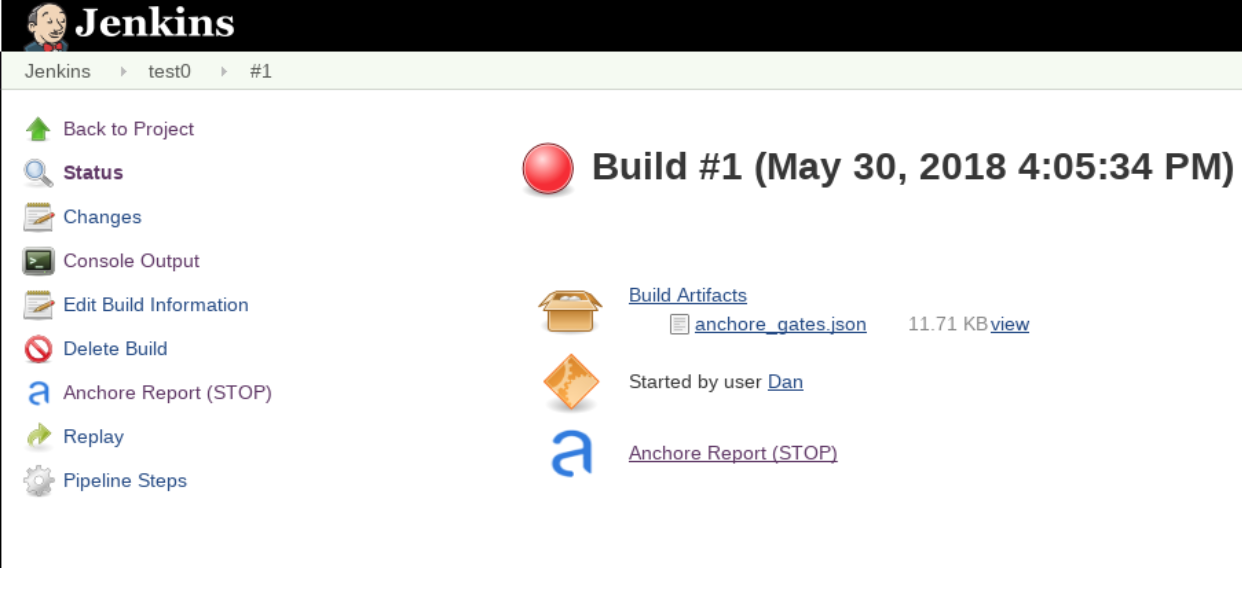

Step 4: Run the build and review the results

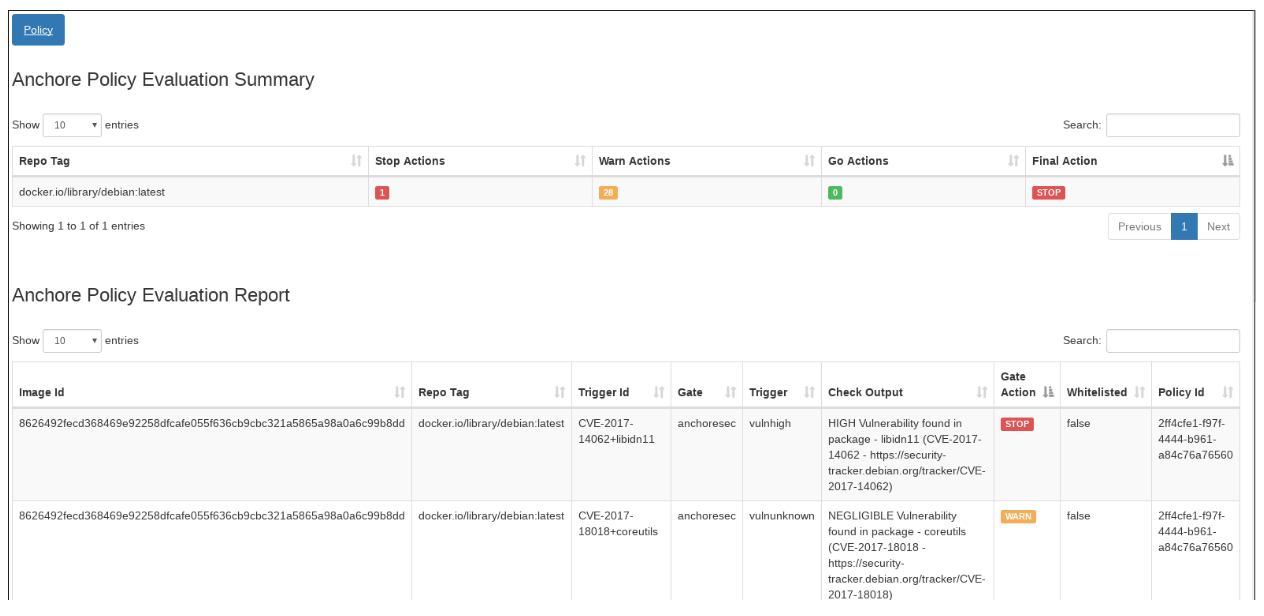

Finally, we run the build, which generates a report. In the screenshots below, we’ve scanned the image docker.io/library/debian:latest to demonstrate some example results. Once the build completes, the final build report contains links to a page describing the result of the Anchore Engine policy evaluation and security scan:

In this case, since we left Fail build on policy STOP result at its default (True), the build failed because anchore-engine reported a policy violation. To see the results, click the Anchore Report (STOP) link:

Here, we can see that a single policy check has generated a ‘STOP’ action, triggered by a high-severity vulnerability found in a package installed in the image. If there were only ‘WARN’ or ‘GO’ check results here, they would also be displayed, but the build would have succeeded.
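The pass/fail rule described here can be summarized in a short Python sketch: the most severe action across all checks wins, and the build fails only when that action is STOP and Fail build on policy STOP result is enabled. This is a simplified model for illustration, not Anchore’s implementation; the `SEVERITY` ordering and the helper names are assumptions.

```python
from typing import Iterable

# Assumed precedence of check actions: GO < WARN < STOP.
SEVERITY = {"GO": 0, "WARN": 1, "STOP": 2}


def final_action(check_actions: Iterable[str]) -> str:
    """Combine individual check actions: the most severe one wins.
    With no checks at all, the result is GO."""
    return max(check_actions, key=SEVERITY.__getitem__, default="GO")


def build_passes(check_actions: Iterable[str], fail_on_stop: bool = True) -> bool:
    """Fail only on a STOP result, and only if fail_on_stop is enabled."""
    return not (fail_on_stop and final_action(check_actions) == "STOP")


if __name__ == "__main__":
    results = ["GO", "WARN", "STOP"]  # hypothetical per-check actions
    print(final_action(results), build_passes(results))  # STOP False
```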

With the combination of Jenkins pipeline project capabilities and the Anchore scanner plugin, it’s quick and easy to add container image security scanning and policy checking to your Jenkins project. This example adds scanning to a Jenkins pipeline project using a simple policy that performs an OS package vulnerability scan, but many more policy options can be configured and loaded into Anchore Engine, ranging from security checks to your own site-specific best-practice checks (software licenses, package whitelist/blacklist, Dockerfile checks, and many more). For more about the breadth of Anchore policies, see the information about Anchore Engine configuration and usage here.
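As an illustration of what site-specific best-practice checks can mean, here is a small Python sketch of two such rules: a package blacklist and a Dockerfile check that flags unpinned base images (the example Dockerfile above uses debian:latest). Both rules and all function names are hypothetical and written in plain Python; in Anchore Engine such checks are expressed as policies loaded into the engine, not as code like this.

```python
from typing import Dict, Iterable, List


def blacklisted_packages(installed: Dict[str, str], blacklist: Iterable[str]) -> List[str]:
    """Return installed package names that appear on the blacklist, sorted."""
    banned = set(blacklist)
    return sorted(name for name in installed if name in banned)


def unpinned_base_images(dockerfile_text: str) -> List[str]:
    """Return base images in FROM lines that use ':latest' or no tag at all.
    Digest references (name@sha256:...) and 'scratch' are accepted."""
    flagged: List[str] = []
    for line in dockerfile_text.splitlines():
        tokens = line.split()
        if not tokens or tokens[0].upper() != "FROM":
            continue
        # Skip flags such as --platform=...; the first remaining token is the image.
        args = [t for t in tokens[1:] if not t.startswith("--")]
        if not args:
            continue
        image = args[0]
        if image == "scratch" or "@" in image:
            continue
        # Only a ':' in the last path segment is a tag; an earlier one
        # may be a registry port (e.g. registry.local:5000/base).
        last_segment = image.rsplit("/", 1)[-1]
        tag = last_segment.split(":", 1)[1] if ":" in last_segment else None
        if tag is None or tag == "latest":
            flagged.append(image)
    return flagged


if __name__ == "__main__":
    # The Dockerfile written by the example pipeline's build stage.
    dockerfile = 'FROM debian:latest\nCMD ["/bin/echo", "HELLO WORLD...."]\n'
    print(unpinned_base_images(dockerfile))  # ['debian:latest']
    print(blacklisted_packages({"telnet": "0.17", "bash": "4.4"}, {"telnet"}))  # ['telnet']
```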

For more information on Jenkins Pipelines and Anchore Engine, check out the following information sources: